Human Performance Limitations in Medicine: A Cognitive Focus

By Bob Baron, PhD

Part 1 of 2

Abstract

Medicine has traditionally been one of the most cognitively demanding occupations. This paper discusses the limitations of human performance in the hospital environment. Human factors models are presented and used as an anchor for a randomly selected case study involving a potentially lethal medication error. The case study’s root cause analysis showed five distinct factors that were causal to the error. The human factors models, in conjunction with an overview of basic human cognition, provide the reader with the tools to understand all five findings of the case study. This paper will provide a foundation for improving medical safety by creating an awareness of the factors that influence errors in medical procedures.

A 55-year-old man with acute myelogenous leukemia and several recent hospitalizations for fever and neutropenia presented to the emergency department (ED) with fever and hypotension. After assessment by the emergency physician, and administration of intravenous crystalloid and empiric broad-spectrum antibiotics, the patient was assessed by his oncologist. Based on the patient’s several recent admissions and the results of a blood culture drawn during the last admission, the oncologist added an order for Diflucan (fluconazole) 100 mg IV to cover a possible fungal infection.

Because intravenous fluconazole was not kept in the ED, the nurse phoned the pharmacy to send the medication as soon as possible. A 50 mL bottle of Diprivan (propofol, an intravenous sedative-hypnotic commonly used in anesthesia) that had been mistakenly labeled in the pharmacy as “Diflucan 100 mg/50 mL” was sent to the emergency department. Because the nurse also worked in the medical intensive care unit, she was quite familiar with both intravenous Diflucan and Diprivan. When a glass bottle containing an opaque liquid arrived instead of the plastic bag containing a clear solution that she expected, she thought that something might be amiss.

As she was about to telephone the pharmacy for clarification, a physician demanding her immediate assistance with another patient distracted her. Several minutes later, when she re-entered the room of the leukemia patient, she forgot what she had been planning to do before the interruption and simply hung the medication, connecting the bottle of Diprivan to the patient’s subclavian line.

The patient’s IV pump alarmed less than one minute later due to air in the line. Fortunately, in removing the air from the line, the nurse again noted the unusual appearance of the “Diflucan” and realized that she had been distracted before she could pursue the matter with the pharmacy. She stopped the infusion immediately and sent the bottle back to the pharmacy, which confirmed that Diprivan had mistakenly been dispensed in place of Diflucan.

The patient experienced no adverse effects—presumably he received none of the Diprivan, given the air in the line, the infusion time of less than a minute, and the absence of clinical effect (Diprivan is a rapidly-acting agent). Nonetheless, the ED and pharmacy flagged this as a potentially fatal medication error and pursued a joint, interdisciplinary root cause analysis, which identified the following contributing factors:

(i) Nearly 600 orders of medication labels are manually prepared and sorted daily; (ii) Labels are printed in “batch” by floor instead of by drug; (iii) The medications have “look-alike” brand names; (iv) A pharmacy technician trainee was working in the IV medication preparation room at the time; and (v) The nurse had been “yelled at” the day before by another physician—she attributed her immediate and total diversion of attention in large part to her fear of a similar episode.

(AHRQ, 2004)

Introduction

The case study you just read is highly representative of an ongoing problem in medicine: the limitations of human performance in a cognitively demanding operational environment.

Although this case did not result in the death of the patient, hundreds, and even thousands, of other medication errors do. In this particular case, the fallibilities of cognitive performance were identified in the root cause analysis (RCA) report. Specifically, the event was broken down into the following chain of causal factors: 1) the inability to keep track of multiple medication labels, 2) assignment of the (batched) labels by floor instead of by drug type, 3) similarity in brand names of drugs, 4) a pharmacy technician trainee who was not very experienced, and 5) a nurse who was “yelled at” by a physician the day before, causing her to lose attention for fear of a repeat episode.
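The third factor, similarity in brand names, can be made concrete by measuring how close the two names are. The following is a minimal Python sketch and not part of the RCA. It assumes plain Levenshtein edit distance as the similarity measure, and the function names `edit_distance` and `shared_affix_lengths` are hypothetical, chosen only for this illustration.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions, or substitutions needed to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution or match
        previous = current
    return previous[-1]


def shared_affix_lengths(a: str, b: str) -> tuple[int, int]:
    """Lengths of the common prefix and the common suffix of two names."""
    shortest = min(len(a), len(b))
    prefix = 0
    while prefix < shortest and a[prefix] == b[prefix]:
        prefix += 1
    suffix = 0
    while suffix < shortest - prefix and a[-1 - suffix] == b[-1 - suffix]:
        suffix += 1
    return prefix, suffix


# The two brand names from the case study, lowercased for comparison.
names = ("diflucan", "diprivan")
print(edit_distance(*names))         # number of single-character edits apart
print(shared_affix_lengths(*names))  # (common prefix length, common suffix length)
```

Both names have eight letters, begin with the same two letters, and end with the same two letters. Only the four letters in the middle differ, which is consistent with the “look-alike” finding in the RCA.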

This paper addresses the cognitive aspects of human performance in medicine. Most of the time, the last person in a chain of errors is assigned the blame for the final outcome of a procedure gone wrong. In the case of medicine, this is usually the physician, surgeon, anesthesiologist, or other caretaker who assumes primary responsibility for a patient’s safety. This assignment of blame, however, does not acknowledge that there may be many other overt or latent “links in the chain” influencing the final outcome, including organizational culture and regulatory policy. This is an area that will be addressed in a separate and more detailed treatise in the near future. For now, the focus will be on the cognitive factors that the caregiver, as the “last line of defense” in the prevention of medical errors, must understand.

Human factors (HF) addresses, among other things, the interactions among people and between people and their environment. HF is a multidisciplinary field that draws on psychology, physiology, anthropometry, biology, and various engineering sciences. With that in mind, HF will be the foundation of this discussion, as it provides a solid model for human performance and the peripheral cognitive features that are the basis of this paper.

Human factors models

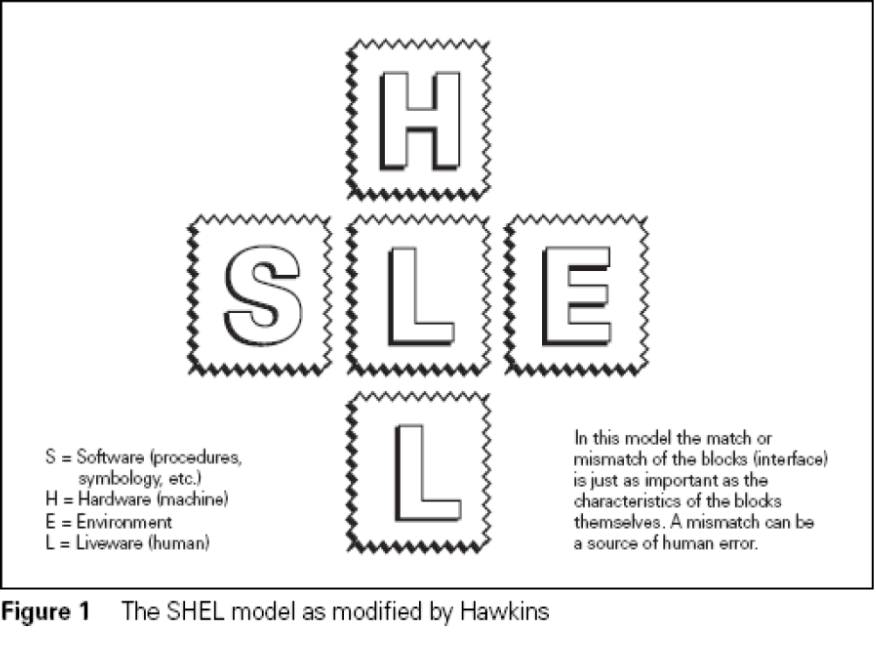

Two important models can help visualize the concepts of HF. The first is called the SHEL model (Edwards, 1972; see Figure 1). The acronym SHEL stands for Software, Hardware, Environment, and Liveware. For clarification, Liveware, in this context, refers to humans. Hawkins (1984) further modified the SHEL model by adding an additional “L” (SHELL). The addition may appear to be somewhat redundant at first glance since it is simply a repetition of Liveware. However, it was added to represent the interactions of humans working with other humans. Its emphasis is important because significantly more accidents occur as a result of deficiencies in person-to-person work, compared to factors such as errant machines, computers, airplanes, medical devices, nuclear power plants, etc.

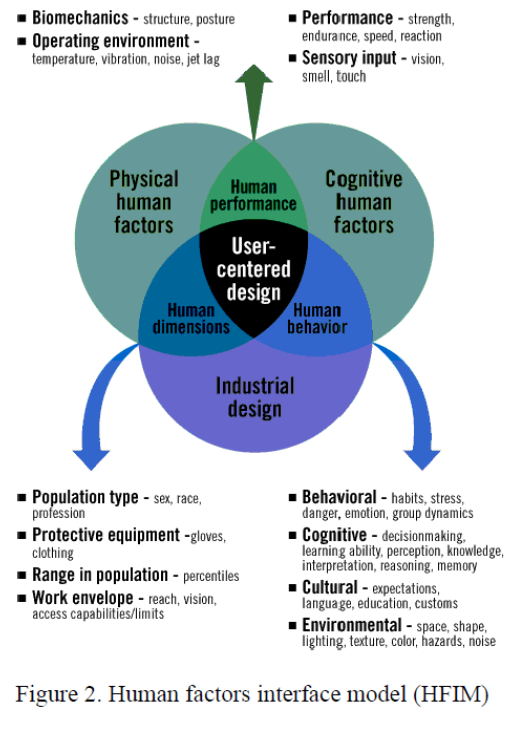

The second model is called the human factors interface model (HFIM). Similar to the SHELL model, HFIM shows the relationship between the human (who is at the center of the design) and the peripheral components that affect the human. Notice the overlap of all the components, affecting not only each other but also the human in the center of the model (Figure 2). For the purpose of this paper, an emphasis will be put on the right side of the model to specifically include cognitive human factors and human behavior.

With a picture of human factors beginning to take shape, we now shift to error classification. Errors can range from benign “slips of the tongue” to blatant violations leading to catastrophic outcomes, with a variety of types in between. Credit must be given to James Reason (1990) for his contribution to an error typology that has been used in HF systems and training programs throughout the world. Reason’s error classification list includes the following:

- Errors of omission. Not performing an act or behavior (simply failing to do it).

- Examples: A delay in performing an indicated cesarean section results in a fetal death; a nurse omits a dose of a medication that should have been administered; a patient suicide is associated with a lapse in carrying out frequent patient checks on a psychiatric unit. Errors of omission may or may not lead to adverse outcomes (The Joint Commission, 2005).

- Errors of commission. Substituting an incorrect act or behavior for the correct one.

- Examples: A drug is administered at the wrong time, in the wrong dosage, or using the wrong route; a surgery is performed on the wrong side of the body; a transfusion occurs involving blood cross-matched for another patient (The Joint Commission, 2005).

- Slips. Execution-based failures, also commonly termed lapses, trips, or fumbles. They may be skill-based (an error in a repetitive task performed over and over) or rule-based (an error caused by failing to properly follow established rules).

- Examples: “Freudian slips” and “slip of the tongue”; a person signals he is turning left when he actually intends to turn right; a physician addresses a patient by the wrong name; an ED doctor orders an x-ray when she means to order an

- Mistakes. Higher-level failures. The actions may go entirely as planned, but the plan itself is not adequate to achieve its intended outcome. Mistakes may be rule-based (when a rule, though correctly followed, is itself incorrect or wrong for the task) or knowledge-based (when no “pre-packaged” rules exist, forcing a caregiver to work out a solution from first principles, a course of action that is highly error-prone in healthcare’s dynamic environment).

- Examples: A surgeon performs a “textbook” amputation but does so on the wrong patient; a surgeon encounters excessive bleeding during a laparoscopic procedure and works from first principles to control the bleeding, possibly exacerbating the problem and setting the stage for additional errors.

- Violations. Intentional deviations from safe operating procedures, recommended practices, rules, or standards. Violations can be further subcategorized as routine (repetitive and automatic) or situational (noncompliance committed simply in order to get the job done).

- Examples: Driving 80 mph in a 55 mph speed zone (routine violation); skipping steps in a complex process in order to finish quickly because of time pressure (situational violation).

Cognition

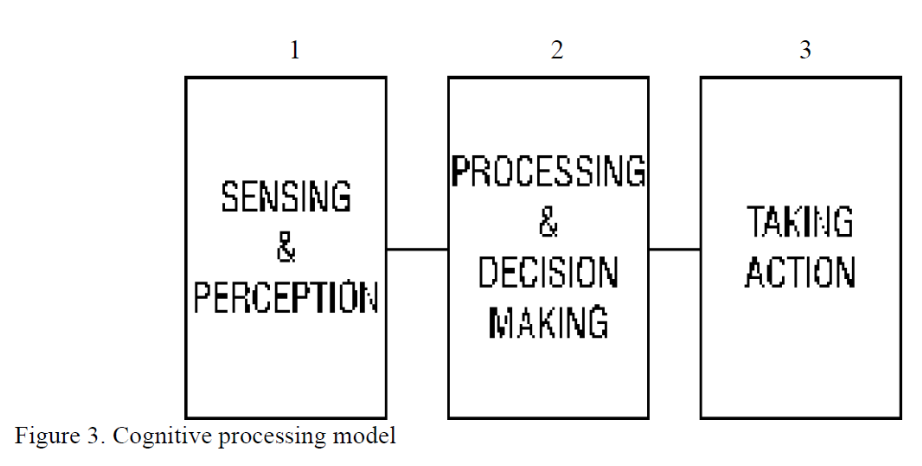

Cognition refers to an individual’s mental processes. These processes can be broken down into three distinct phases: 1) sensing and perception, 2) processing and decision-making, and 3) taking action. This is a three-step, sequential cognitive processing model as shown in Figure 3.

Cognition is an integrated process that involves memory, attention, perception, action, problem solving, and mental imagery. In fact, when looking at the myriad of internal and external influences that might be apparent in each of the three phases, it becomes evident that cognition is quite complex. For example, in the sensing and perception phase, errors may occur due to inadequate lighting, excessive noise, poor-quality manuals, and ambiguous or poorly written orders or prescriptions. Processing and decision-making errors might be due to fatigue, inadequate training, or time pressure. Errors in taking action may be caused by poor equipment design, inadequate procedures, distractions, or extreme workplace temperatures (such as the cold temperatures frequently encountered in the OR or ICU).

Medicine may be one of the most cognitively demanding careers a person can choose. Although much has been learned from the aviation industry in terms of high-risk complex cognition on the job, the cockpit is a mere speck in comparison to the complexities of medical assessments and procedures. Unlike aviation, where for the most part there is at least some time for information processing, many life-or-death medical decisions need to be made in a split second.

The quality of these decisions is based on, and influenced by, the cognitive processing model. The process is analogous to the operation of a computer. The senses, such as sight, hearing, and touch, take in massive amounts of stimuli (inputs). Perceptual thresholds differ among people, and it is not uncommon for individuals to see the same thing differently or even not at all. This may not be due to a cognitive deficiency, but instead could be based on such things as culture, age, gender, bias, or past experiences.

From there, the information is processed by higher-level brain functions to make sense of the incoming information. Memory is an extremely important part of the processing and decision-making phase. Memory is the core of most cognitive processes; without it, a person would be extremely limited in function. Yet human memory is highly fallible and subject to error (Baron, 2004). The two types of memory that people are most familiar with are short-term memory (STM) and long-term memory (LTM). STM is problematic in areas such as remembering verbal instructions or orders, particularly when there are numerous distractions (such as in the ED). People “chunk” information into pieces in order to remember it, and short-term recall works best when it involves approximately seven (plus or minus two) such chunks (Miller, 1956). Beyond this number, accurate short-term recollection becomes difficult.
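The effect of chunking on short-term memory load can be illustrated with a minimal Python sketch. The ten-digit string, the chunk size of three, and the names `chunk` and `within_stm_span` are assumptions made for illustration only. The upper limit of nine items is simply seven plus two, taken from Miller (1956).

```python
STM_UPPER_LIMIT = 7 + 2  # upper end of Miller's "seven, plus or minus two"


def chunk(sequence: str, size: int) -> list[str]:
    """Split a sequence into consecutive groups of at most `size` characters."""
    return [sequence[i:i + size] for i in range(0, len(sequence), size)]


def within_stm_span(items: list[str]) -> bool:
    """True if the number of items does not exceed the assumed upper limit."""
    return len(items) <= STM_UPPER_LIMIT


digits = "4155550132"            # hypothetical ten-digit string
as_single_digits = list(digits)  # ten separate items to hold in memory
as_chunks = chunk(digits, 3)     # four items: 415, 555, 013, 2

print(len(as_single_digits), within_stm_span(as_single_digits))
print(len(as_chunks), within_stm_span(as_chunks))
```

Held as individual digits, the string is ten items long and exceeds the assumed limit. Grouped into chunks of three, the same information becomes four items, which falls well within it.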

LTM is also an extremely important part of the processing phase. Because many decisions are based on past experiences, LTM can be thought of as a storage area for global information. From this information, people are able to use schemata or templates to solve a current problem. Obviously, the more experience a person has, the more templates will be available. The downside of LTM is that there may be problems in retrieving the data at the particular moment that it is required. The retrieval problem can be caused by pathological factors, environmental variables, the use of medications, or simply a decline in memory retrieval as a function of normal aging. This incidentally raises the controversial question of whether you, as a hospital patient or an airline passenger, would feel safer in the hands of an older surgeon or pilot, respectively. With age comes experience, but at what point is a doctor or a pilot not able to perform safely and effectively? An airline pilot, by regulation, must retire at age 65. There is no mandatory retirement age for a doctor.

Of course, memory is not the only ingredient in the processing and decision-making phase. Many other factors, such as fatigue, stress, and traumatic life events, can have a profound effect on a person’s decision-making ability.

The final phase of the cognitive processing model is the action that is taken (the output) after processing has occurred. Assuming a proper decision has been made, it is still possible that an execution error can occur in this final phase. A well-intentioned act may be hampered if the surgeon does not have the proper equipment for the task. One of the best examples can be found in laparoscopic surgery, which is less invasive and has a much faster recovery time than traditional surgery. However, this procedure is also less accommodating for the surgeon. Unlike in an open procedure, the surgeon does not have direct visual contact with the surgical site. Instead, he or she must look at a video monitor to see what is taking place. Additionally, the surgeon lacks the tactile feedback of open surgery due to the length of the shaft of the surgical instruments (Matern, 1998, 1999, 2001, cited in Bogner, 2004, p. 79). Finally, the limited movement of the video camera and instrument forces the surgeon into unnatural and uncomfortable body postures that can affect the outcome of the operation (p. 79).

Laparoscopic surgery can be classified as a fairly risky procedure, due in part to the problems listed above. This example shows how the right plan can go wrong during the taking-action (execution) phase.

Bob Baron, PhD, is the president and chief consultant of The Aviation Consulting Group in Myrtle Beach, South Carolina. He has been involved in aviation since 1988, with extensive experience as a pilot, educator, and aviation safety advocate. Dr. Baron uses his many years of academic and practical experience to assist aviation organizations in their pursuit of safety excellence. His specializations include safety management systems and human factors.

References

Agency for Healthcare Research and Quality. (2004). Caution: Interrupted. Morbidity and Mortality Rounds on the Web. Retrieved from https://psnet.ahrq.gov/web-mm/caution-interrupted

Baron, R. (2004). Pilots and memory: A study of a fallible human system. Retrieved from http://www.vipa.asn.au/sites/default/files/pdf/Pilots and Memory.pdf

Edwards, E. (1972). Man and machine: Systems for safety. In Proceedings of British Airline Pilots Association Technical Symposium (pp. 21–36). London: British Airline Pilots Association.

The Joint Commission. (2005). Sentinel event glossary of terms.

Matern, U. (1998, 1999, 2001). The laparoscopic surgeon’s posture. In M. S. Bogner (Ed.), Misadventures in Healthcare: Inside stories (pp. 75–88). Mahwah, NJ: Lawrence Erlbaum Associates.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63, 81–97.

Reason, J. (1990). Human error. Cambridge: Cambridge University Press.