Technology: Choosing the Right Vendor for Incident Reporting

September / October 2012


Selecting the right vendor is like dating: once you find "the one," you want to make it a long-term partnership. And much like in dating, if you choose a vendor whose vision and behavior do not align with yours, you can both feel stuck in an unhappy relationship.


Trinity Health is one of the largest Catholic healthcare systems in the United States, with 49 acute-care hospitals, 432 outpatient facilities, 32 long-term care facilities, and numerous home-health offices and hospice programs. Trinity Health operates in 10 states and draws on a rich and compassionate history of care that has lasted more than 140 years.

In 2009, it became apparent that the way we were tracking incidents at Trinity Health was not optimal. We set out to find a new incident reporting system from a vendor whose mission matched ours. At Trinity Health, our culture and operating model are focused on creating a superior patient care experience. Unfortunately, we knew that with more than 500 sites, gaining consensus on which system would help us achieve that goal would be a challenge. We needed a concrete plan to find "the one."

Outlining the Process

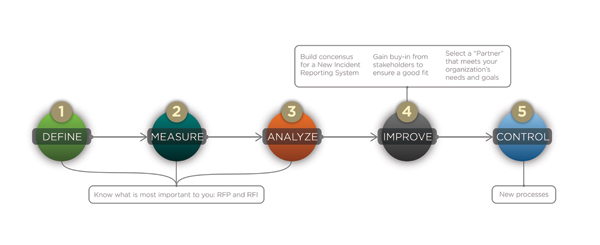

The process we chose is based on DMAIC (Define, Measure, Analyze, Improve, Control), the problem-solving and process-improvement roadmap at the core of the Six Sigma quality improvement methodology (Figure 1). At Trinity Health, we applied DMAIC to choosing a vendor for our new incident reporting system in this way:

- Define what’s important to you.

- Measure how it’s important to you.

- Analyze how your current processes should change.

- Improve by building consensus and gaining buy-in.

- Control how the new system is implemented.

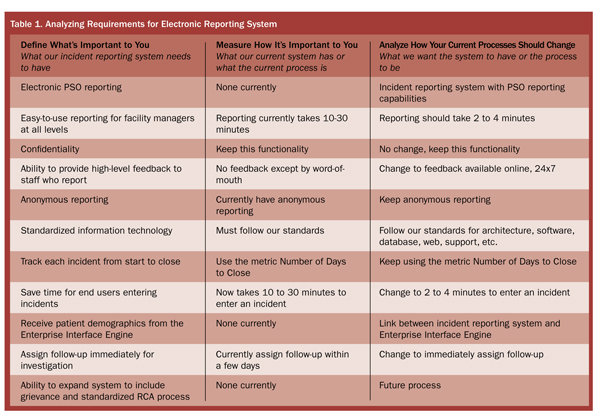

First, we outlined a few basic features that our electronic reporting system needed to have. These were non-negotiable: without them, a system would not make it past the request for information (RFI) stage. Next, we described our current process for tracking incidents and how we wanted it to change. This made it easier to compare different systems and to measure both tangible features and intangible benefits (Table 1).

Improving the Process: Building Consensus and Gaining Buy-In

Trinity Health assembled a steering committee of “champions” who engaged other teams to build consensus on what we needed in our new incident reporting system. This was an important step: failure to gain buy-in from stakeholders can mean choosing a partner that does not meet your organization’s needs and goals. It is essential to ensure a good fit, or user adoption of the new system will suffer.

The core team held weekly status meetings throughout the project, where we reviewed project milestones and tracked each one as complete, in progress, or not started. At each meeting, the team reviewed action items in detail, confirming each item's owner, due date, and status. We also identified critical issues and risks that could affect the project's outcomes or timelines.

With this process defined, Trinity Health sent out an RFI based on the criteria identified in the previous stages. After receiving the RFI responses, we assembled a request for proposal (RFP) that included our system requirements (the concrete, quantifiable items identified in the initial phases, plus anything from the RFI responses that needed further explanation) and our end-user requirements (the qualitative requirements).

Narrowing Down the Field

From the RFP responses, we shortlisted several vendors whose systems we wanted to see demonstrated live. Before going on our "first date," we talked to others to find out whether each vendor would be a good fit for Trinity Health. For us, that meant talking to trusted organizations that endorsed the vendor (such as the American Hospital Association), as well as end users, project managers, and risk managers at other hospitals that use the system.

To keep track of all of the vendors, we recorded these reference customers' responses as qualitative data on a scorecard. Some of the questions we asked included:

- What specifically caused you to choose this vendor?

- What is your experience with the vendor’s customer service/technical support?

- What does your staff like best/least about the vendor?

- Have you added facilities since you implemented this system? What was your experience onboarding a new facility to the system?

Vendors that passed this reference check were invited to demonstrate their systems. Again, we used scorecards to evaluate each system's functionality. Using a scorecard for team voting helps to reduce bias and makes it easier to reach a group decision. In addition to the scores, the selection team used qualitative and quantitative data to identify each vendor's strengths and weaknesses before making a final decision. We evaluated each vendor's system for the following capabilities:

- Ease of use

- Workflow/alerts

- Reporting

- Security/system administration

- Technology

- Training & implementation

- Future capabilities (root cause analysis and grievance)
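The article does not say how the team's scorecards were tallied. As a minimal sketch, assuming each evaluator rates every capability above on a hypothetical 1-to-5 scale, the snippet below averages each category across evaluators and sums the category averages into one score per vendor. The optional `weights` dictionary, the vendor names, and the ballots are all illustrative assumptions, not Trinity Health's actual scoring scheme.

```python
from statistics import mean

# Capability categories from the evaluation list above.
CATEGORIES = [
    "Ease of use",
    "Workflow/alerts",
    "Reporting",
    "Security/system administration",
    "Technology",
    "Training & implementation",
    "Future capabilities",
]


def vendor_score(ballots, weights=None):
    """Average each category across evaluators' ballots, then sum the
    category averages (each multiplied by an optional weight, default 1.0)."""
    weights = weights or {}
    return sum(
        weights.get(category, 1.0) * mean(ballot[category] for ballot in ballots)
        for category in CATEGORIES
    )


def rank_vendors(scorecards, weights=None):
    """Return (vendor, score) pairs sorted from highest to lowest score."""
    totals = {vendor: vendor_score(ballots, weights) for vendor, ballots in scorecards.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical ballots: two evaluators scoring two unnamed vendors on a 1-5 scale.
scorecards = {
    "Vendor A": [dict.fromkeys(CATEGORIES, 4), dict.fromkeys(CATEGORIES, 5)],
    "Vendor B": [dict.fromkeys(CATEGORIES, 3), dict.fromkeys(CATEGORIES, 4)],
}
print(rank_vendors(scorecards))  # [('Vendor A', 31.5), ('Vendor B', 24.5)]
```

Averaging per category before summing keeps one enthusiastic or skeptical evaluator from dominating a single capability; passing weights (for example, `{"Reporting": 2.0}`) is one way to reflect the priorities set during the Define and Measure stages.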

At the live demo, we asked each vendor to show how its system would support our day-to-day processes. We asked them to demonstrate, in the actual software, how to accomplish specific tasks.

Example: Trinity Health has just issued a safety alert about a Brand-X infusion pump. An executive at the hospital calls the risk manager and asks for a report on all infusion pumps, with a list of all reported events related to this type of pump. He also wants to know our error rate related to the pump settings.

Each vendor was required to show us how to run this report in its system, so we could compare how easily each one handled this type of daily task. If only dating were that transparent!
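The article does not describe how any vendor's system built this report. As a rough sketch of the logic the task exercises, assuming a hypothetical, simplified incident record with `facility`, `device`, and `cause` fields, and interpreting the "error rate related to pump settings" as the share of reported Brand-X pump events whose cause is coded as a settings error, the report reduces to a filter and a ratio. The `Incident` class, `device_report` function, and sample records are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    # Hypothetical, simplified incident record; real systems capture many more fields.
    facility: str
    device: str
    cause: str


def device_report(incidents, device):
    """Return every event reported for `device` and the share of those
    events whose cause was coded as a pump-settings error."""
    events = [i for i in incidents if i.device == device]
    settings_errors = sum(1 for i in events if i.cause == "pump settings")
    rate = settings_errors / len(events) if events else 0.0
    return events, rate


# Hypothetical sample data for illustration only.
incidents = [
    Incident("Hospital 1", "Brand-X infusion pump", "pump settings"),
    Incident("Hospital 1", "Brand-X infusion pump", "line occlusion"),
    Incident("Hospital 2", "Brand-X infusion pump", "battery failure"),
    Incident("Hospital 2", "Brand-Y infusion pump", "pump settings"),
]
events, rate = device_report(incidents, "Brand-X infusion pump")
print(len(events), f"{rate:.0%}")  # 3 33%
```

The point of the demo exercise is how many steps a risk manager needs to get this answer out of the software; a system that stores device and cause as consistent, coded fields makes this kind of query straightforward.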

Choosing Our Vendor and Controlling Implementation

Finally, we made our decision by comparing the vendors' scores and reviewing all of the qualitative and quantitative data. Because we followed a defined DMAIC process, one vendor was the clear winner. The final step is to ensure that the system is implemented in a way you are comfortable with and that matches the process outlined in the vendor's statement of work.

Because we decided early in the process what was important to us and what we wanted to change, and because we built consensus and gained buy-in, selecting a vendor for our incident reporting system was relatively easy. We had found "the one."

Joyce Zerkich is a project manager at Trinity Health. She has 20 years of experience improving enterprise information technology delivery through strategic planning, risk management, security, change management, website development, EMR development, and program/project management. Zerkich is also a contributing author to the HIMSS-published book "Implementing Business Intelligence within HealthCare" and a frequent speaker at healthcare technology conferences. She can be reached at zerkichj@trinity-health.org.


For electronic incident reporting, Trinity Health uses RL Solutions' RL6:Risk adverse event management software, which has earned the exclusive endorsement of the American Hospital Association.